Putting Generative AI to Work - Conference

Packt Publishing ran a three-day virtual conference titled "Put Generative AI to Work" from October 11 to 13. I was fortunate enough to attend. Here are my main learnings and takeaways.

[Source: Bing Generate]

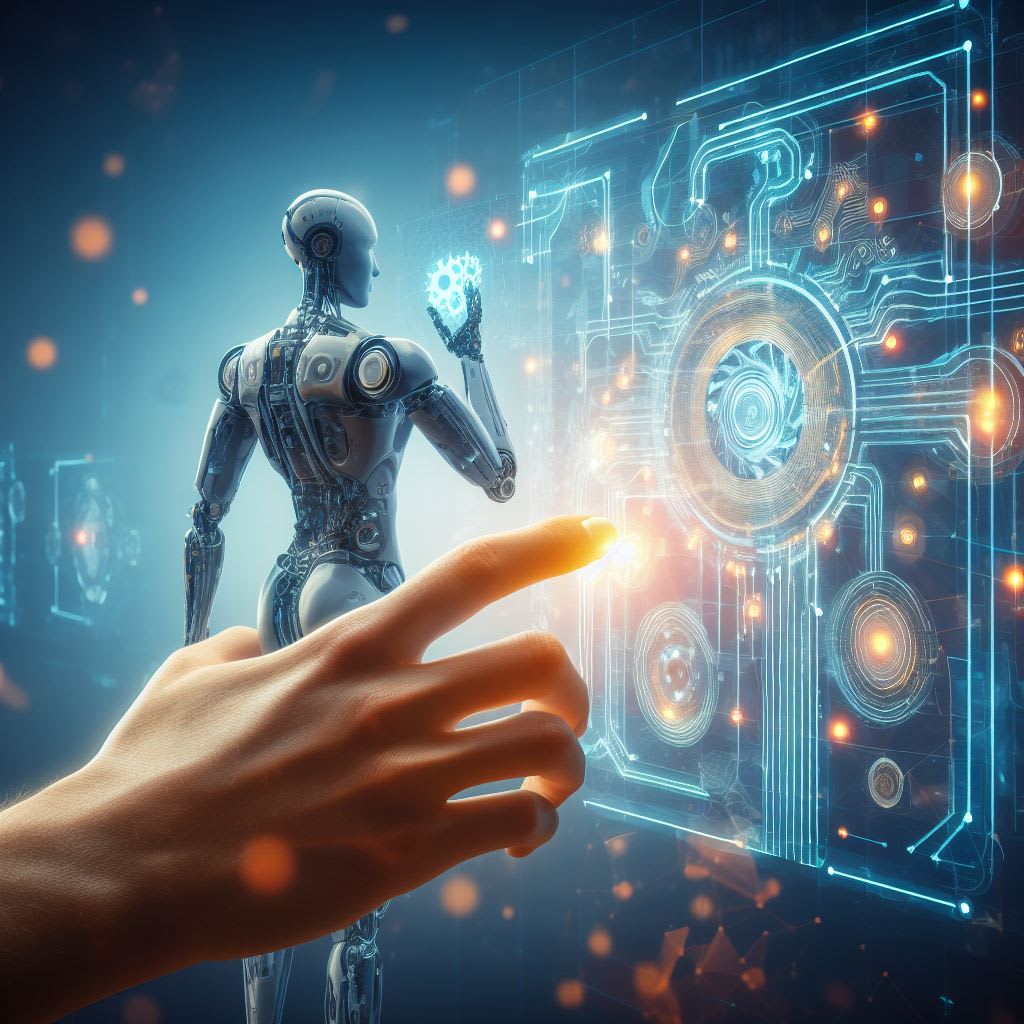

Although we can't seem to escape the buzz around Gen AI, Gartner predicts that it has hit the peak of inflated expectations in the hype cycle. From here on, we can expect a steep fall, followed by the realization that Gen AI is really good at certain tasks and not the best tool for others.

[Source: Gartner Hype Cycle]

My Learnings

With this background, let’s take a whirlwind tour of different things that I picked up at the conference.

Panel Discussion

In a panel discussion, industry stalwarts shared some sobering observations.

- Unlike past waves of technological progress, AI adoption will actually replace higher-value jobs.

- However, new jobs that we have not heard of will start to appear. Maria quipped that a prompt engineer with eight months of experience is considered a tenured employee in this new field!

- Denis Rothman observed that programming jobs will remain. His quip: can you imagine someone sending a rocket to the moon with Gen AI-generated code?

- They also highlighted that AI (not necessarily Gen AI) has been around for quite some time. It is present in aircraft management, automobiles, manufacturing and many other industries. These deployments simply did not attract as much attention.

Their suggestions were:

- Start small: begin learning about the world of AI and Gen AI.

- Domain experience is irreplaceable, but AI skills can be automated away. Your tenure in the industry still holds value.

- People who can translate business requirements for data scientists, and translate the data scientists' technical clarifications back to the business, are absolutely necessary. AI managers are critical.

- When trying to implement a project, start small.

- Start with something that you know and can understand.

- These suggestions closely mirror the basics of agile programming!

Evolution of e-Commerce

The next session I loved was by Somil Gupta, on how Generative AI could drive the evolution of e-commerce. Unlike the gloom-and-doom scenarios, this session was refreshingly optimistic and covered a lot of ground.

- Understanding Intent: The current search mechanism is not optimal. Because of the way search is designed, there is a wide chasm between our intent and our query. For example, we may search for "spiderman dress for 5 year old boy" when our intent is to plan a Spider-Man-themed birthday party for a five-year-old boy and 15 of his friends, including party supplies, T-shirts and return gifts.

- AI-Assisted Commerce: Gen AI technologies can understand our intent better. They can think through the request, suggest steps, and thus make the shopping experience less stressful. For example, a user could search for something like this:

I am attending an office party. I’d like to order a stylish red dress suitable for the occasion that makes a statement. My budget is 150-200 dollars.

With AI-assisted search, this query can be broken down to identify its features. The algorithm could then ask clarifying questions, such as the desired material or shipping preferences, before providing links to suitable dresses.

- Product Personalization: Gen AI technologies, when combined with other products like plugins, can take personalization to a new level. For example, when a user tries to enroll in a course on AI, the system could pull the user's LinkedIn profile and detect that they have not refreshed their math skills in 15 years. The system could then produce a hyper-personalized course with a 2-day introduction to math followed by AI lessons tailored to the user's domain.

- Other ideas involved systems where one orchestrator model talks to other AI models for specific inputs, combines the results, and presents them in a comprehensible manner. For example, a financial advisor model could talk to a bonds model, a stocks model and so on.
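
To make the office-party example concrete, here is a minimal Python sketch of the decomposition step: pull structured features (item, color, occasion, budget) out of the free-text query, then ask clarifying questions only for what is still missing. It rests on loud assumptions: the slot names, the question wording and the regex rules are all hypothetical, and the rules merely stand in for the LLM that a real assistant would use to understand intent.

```python
import re

# Hypothetical slots a shopping assistant might want filled before searching.
REQUIRED_SLOTS = ("item", "color", "occasion", "budget", "material", "shipping")

# Hypothetical follow-up questions for slots the query leaves open.
QUESTIONS = {
    "item": "What kind of item are you looking for?",
    "color": "Do you have a color in mind?",
    "occasion": "What is the occasion?",
    "budget": "What is your budget?",
    "material": "Do you have a preferred material?",
    "shipping": "When and where do you need it delivered?",
}

COLORS = ("red", "black", "blue", "green", "white")
ITEMS = ("dress", "suit", "shirt", "shoes")


def extract_slots(query: str) -> dict:
    """Rule-based stand-in for the LLM step that pulls features out of a query."""
    text = query.lower()
    slots = {}
    budget = re.search(r"(\d+)\s*-\s*(\d+)\s*(?:dollars|usd)", text)
    if budget:
        slots["budget"] = (int(budget.group(1)), int(budget.group(2)))
    for color in COLORS:
        if re.search(rf"\b{color}\b", text):  # word boundaries avoid matching inside other words
            slots["color"] = color
            break
    for item in ITEMS:
        if re.search(rf"\b{item}\b", text):
            slots["item"] = item
            break
    occasion = re.search(r"attending an? ([a-z ]+?party)", text)
    if occasion:
        slots["occasion"] = occasion.group(1)
    return slots


def clarifying_questions(slots: dict) -> list:
    """Ask only about the slots the query did not answer, in a fixed order."""
    return [QUESTIONS[name] for name in REQUIRED_SLOTS if name not in slots]


query = ("I am attending an office party. I'd like to order a stylish red dress "
         "suitable for the occasion that makes a statement. My budget is 150-200 dollars.")
slots = extract_slots(query)
print(slots)
print(clarifying_questions(slots))
```

With the office-party query, this fills item, color, occasion and a (150, 200) budget, leaving material and shipping as the two follow-up questions. Keeping the slot list explicit makes it easy to see what the assistant still needs to ask before it searches.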

I felt this was one of the best sessions on what AI technologies could transform in the future. Personally, I would have liked to see it as a keynote session.
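
The orchestrator idea from this session can be sketched as a dispatcher that fans a request out to specialist models and stitches their answers together. In this hypothetical Python sketch, `bonds_model` and `stocks_model` are plain functions standing in for separate AI models, and simple keyword matching stands in for the orchestrator model's own decision about which specialists to consult.

```python
from typing import Callable, Dict, List


# Hypothetical specialist models; in a real system each would be a separate AI model or service.
def bonds_model(profile: dict) -> str:
    return "short-duration bonds" if profile["horizon_years"] < 5 else "a ladder of bond maturities"


def stocks_model(profile: dict) -> str:
    return "broad index funds" if profile["risk"] == "low" else "a growth-tilted equity mix"


SPECIALISTS: Dict[str, Callable[[dict], str]] = {
    "bonds": bonds_model,
    "stocks": stocks_model,
}


def route(question: str) -> List[str]:
    """Decide which specialists to consult; keyword matching stands in for the orchestrator's reasoning."""
    q = question.lower()
    chosen = [name for name in SPECIALISTS if name in q]
    return chosen or list(SPECIALISTS)  # nothing matched: consult every specialist


def financial_advisor(question: str, profile: dict) -> str:
    """Orchestrator: fan the request out, then combine the answers into one readable reply."""
    answers = {name: SPECIALISTS[name](profile) for name in route(question)}
    combined = "; ".join(f"{name.capitalize()}: {advice}" for name, advice in answers.items())
    return f"Suggested plan. {combined}."


print(financial_advisor("How should I split money between stocks and bonds?",
                        {"horizon_years": 3, "risk": "low"}))
```

Adding another specialist (say, a hypothetical real-estate model) only means registering one more function in `SPECIALISTS`; the orchestrator's combine step stays the same.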

Prompt Engineering

Unlike typical prompt engineering sessions where someone opens a ChatGPT interface and starts typing, Valentina Alto's session took us under the hood of how GPT interprets a prompt. She shared guidelines for designing effective prompts. Here are some items I noted down:

- Clear instructions are a must.

- If you have a complex query, break it down into sub-tasks.

- Force the system to slow down by asking it to explain its thinking and justify its answers.

- Be descriptive on how you’d like the system to answer.

- Order within the prompt matters: the last few sentences carry the most weight.

- Repeat important or critical items.

- Give the model a way out (for example, permission to say it doesn't know) so that it doesn't hallucinate.
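
As a rough illustration, the guidelines above can be folded into a small prompt-assembly helper. This Python sketch is an assumption about how one might lay out such a prompt, not the structure presented in the session: the `build_prompt` name, the section labels and the exact escape-hatch sentence are all hypothetical.

```python
def build_prompt(task: str, subtasks: list, answer_format: str,
                 context: str, critical: str) -> str:
    """Assemble a prompt applying the guidelines above (an illustrative layout, not a standard)."""
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(subtasks, start=1))
    sections = [
        f"Task: {task} {critical}",                         # clear instruction; critical item stated once
        f"Work through these sub-tasks in order:\n{steps}",  # complex query broken into sub-tasks
        "Explain your thinking for each sub-task and justify your final answer.",  # slow the model down
        f"Answer format: {answer_format}",                   # describe how the answer should look
        f'Context:\n"""\n{context}\n"""',                    # delimit the material the model should use
        "If the context does not contain the answer, reply exactly: I don't know.",  # a way out instead of guessing
        f"Remember: {critical}",                             # repeat the critical item last, where it weighs most
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    task="Summarize the customer's complaint.",
    subtasks=["Identify the product.", "Identify the problem."],
    answer_format="Two bullet points.",
    context="My blender stopped working after a week.",
    critical="Do not invent details.",
)
print(prompt)
```

The critical instruction appears twice, and the second copy is the final line, which is where the session said the model places the most weight.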

She then walked us through some advanced prompting concepts:

- Few-shot approach: Provide a few examples. This helps make the model's output more purpose-driven.

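- Use cues: start the response for the model (for example, end the prompt with the first words of the desired output) to steer what it writes.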

- Use separator marks: keep the examples clearly separated from the rest of the prompt.

- Break down tasks: Especially useful for mathematical prompts.

- Chain of thought: Prompt the model to reason step by step. Take the output of the previous step and use it as the input for the next step.

- ReAct (Reason + Act): Useful when working with agents. It is similar to chain of thought, but interleaves reasoning steps with actions such as tool calls.
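
Several of these concepts combine naturally. The hypothetical Python sketch below builds a few-shot prompt whose examples show their reasoning (chain of thought), separates each example with `###` marks, and ends with a cue ("Reasoning: Let's think step by step.") so the model begins by reasoning instead of jumping to an answer. The example problems and the `###` separator are illustrative choices, not something prescribed in the session.

```python
# Hypothetical worked examples: each pairs a question with step-by-step reasoning and an answer.
EXAMPLES = [
    ("A shirt costs $20 and is 25% off. What does it cost now?",
     "25% of 20 is 5. 20 - 5 = 15.", "$15"),
    ("Pens cost $2 each. I buy 3 and pay with $10. What is my change?",
     "3 pens cost 3 * 2 = 6. 10 - 6 = 4.", "$4"),
]

SEPARATOR = "###"  # separator marks keep each example apart from the rest of the prompt


def few_shot_cot_prompt(question: str) -> str:
    """Few-shot + chain-of-thought prompt that ends with a cue so the model starts by reasoning."""
    blocks = [
        f"{SEPARATOR}\nQuestion: {q}\nReasoning: {r}\nAnswer: {a}"
        for q, r, a in EXAMPLES
    ]
    # The new question uses the same layout, but the reasoning is left for the model to write.
    blocks.append(f"{SEPARATOR}\nQuestion: {question}\nReasoning: Let's think step by step.")
    header = "Solve each problem. Show your reasoning first, then give the final answer."
    return header + "\n\n" + "\n\n".join(blocks)


print(few_shot_cot_prompt("A ticket costs $12. How much do 4 tickets cost?"))
```

Because every example breaks the arithmetic into small steps, the model is nudged to do the same for the new question, which is the "break down tasks" advice applied to math prompts.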

For those of you who'd like to go deeper, here are 2 papers that were suggested:

LLM Training Hands-On Sessions

I also attended two sessions by Denis Rothman. I am not sure about the restrictions on sharing the code, so I'll refrain from doing so. However, the primary takeaways were:

- If you want to be in this field, focus on understanding the concept of Transformers. This is critical.

- The math may look daunting, but it is not.

- Once the concept is clear, Denis felt, the "wow!" factor of Gen AI would give way to an "of course!", along with the realization that there is really no "Gen" in Gen AI!
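
To back up the claim that the math is less daunting than it looks: the core operation of a Transformer is scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V, from the paper "Attention Is All You Need". The pure-Python sketch below computes it for tiny matrices. It is a toy with stated simplifications: a single head, no masking, and no learned projection matrices producing Q, K and V.

```python
import math


def softmax(xs):
    """Turn a list of scores into positive weights that sum to 1."""
    m = max(xs)  # subtracting the max avoids overflow without changing the result
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one head, no masking."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))  # how well each query matches each key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights  # each output row is a weighted average of V's rows


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
output, weights = attention(Q, K, V)
print(weights, output)
```

Here the single query matches the first key better, so the first row of V receives the larger weight (about 0.67), and the output leans toward `[10, 0]`. The division by √d_k keeps the scores from growing with the vector size, which would otherwise push the softmax toward one-hot weights.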

Security

We also had sessions by Clint on security risks and mitigation steps when working with Gen AI. He introduced the OWASP Top 10 for ML and its mitigation strategies. Many of these sounded like standard but robust software engineering practices. One call-out for me was about using pre-trained models: if a malicious actor feeds bad data to a popular model, the model can ingest it and produce wrong or harmful output.

If companies come to rely more on open source pre-trained models, such issues may become more critical.
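
One basic supply-chain control when adopting third-party models is to pin the SHA-256 hash of the exact model file you reviewed and refuse to load anything else. The Python sketch below assumes a hypothetical workflow in which a trusted hash was recorded at review time; `verify_model_file` is an illustrative name. Note its limit: it detects a file that was swapped or altered after vetting, but it cannot detect poisoning that was already baked into the version you approved.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large model weights need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_model_file(path: Path, pinned_sha256: str) -> None:
    """Refuse a model file whose hash differs from the one recorded when it was vetted."""
    actual = sha256_of(path)
    if actual != pinned_sha256.lower():
        raise ValueError(f"{path.name}: hash mismatch (expected {pinned_sha256}, got {actual})")
```

In practice the pinned hash would live in version control next to the code that loads the model, so a changed file fails loudly before it is ever used.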

Closing Thoughts

As a technology professional, my main thoughts at the end of the conference were the following:

- If we want to stay relevant, we need to understand some core concepts like Transformers.

- Instead of trying to learn the cheat codes for effective prompts, we should invest time in learning how AI systems break down queries. This can help us build better prompts. Such a design may require us to build our own API and front end instead of relying on publicly available interfaces.

- Architects, developers and project managers are very much a necessity. Domain knowledge is still relevant.

- Enterprises may start adopting more open source models and deploying them within their own cloud environments. This can help prevent data loss or the leaking of sensitive information.

- Using technologies like LangChain will be akin to writing in a sequential programming language: each AI model may be like a function call, and orchestrating them will deliver a business outcome.

- Gen AI is not equal to ChatGPT. There is a lot more to it!

- Learn more about Hugging Face and the models being published on this platform.
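
The "models as function calls" idea can be illustrated without any framework. The Python sketch below is not LangChain's API: `summarize`, `classify` and `draft_reply` are hypothetical stand-ins for model calls, and `chain` simply feeds each step's output into the next, the way a sequential program calls functions in order.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def summarize(text: str) -> str:
    """Stand-in for a summarization model: keep the first sentence."""
    return text.split(".")[0].strip() + "."


def classify(text: str) -> str:
    """Stand-in for a classifier model: a crude keyword rule tags the summary."""
    label = "complaint" if "late" in text.lower() else "other"
    return f"[{label}] {text}"


def draft_reply(tagged: str) -> str:
    """Stand-in for a generation model: write a reply based on the tag."""
    label, _, summary = tagged.partition("] ")
    if label == "[complaint":
        return f"Sorry to hear this: {summary} We will look into it."
    return f"Thanks for writing: {summary}"


def chain(*steps: Step) -> Step:
    """Run steps in order, feeding each output into the next step."""
    return lambda text: reduce(lambda acc, step: step(acc), steps, text)


pipeline = chain(summarize, classify, draft_reply)
print(pipeline("My order arrived late. The box was also damaged."))
```

Swapping any stand-in for a real model call leaves the chaining logic unchanged, which is the point of treating each model as just another function.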